| Name | Email |
| --- | --- |
| Yinghao | zhangyinghao@berkeley.edu |
| Yifan Wang | wyf020803@berkeley.edu |
| Tianzhe Chu | chutzh@berkeley.edu |
| Xueyang Yu | yuxy@berkeley.edu |

Our project implements the conversion of point clouds to meshes. This conversion improves the flexibility and compatibility of 3D object representations across applications, letting users work with whichever format best suits their needs. We implement the paper mentioned in the Final Project Idea.

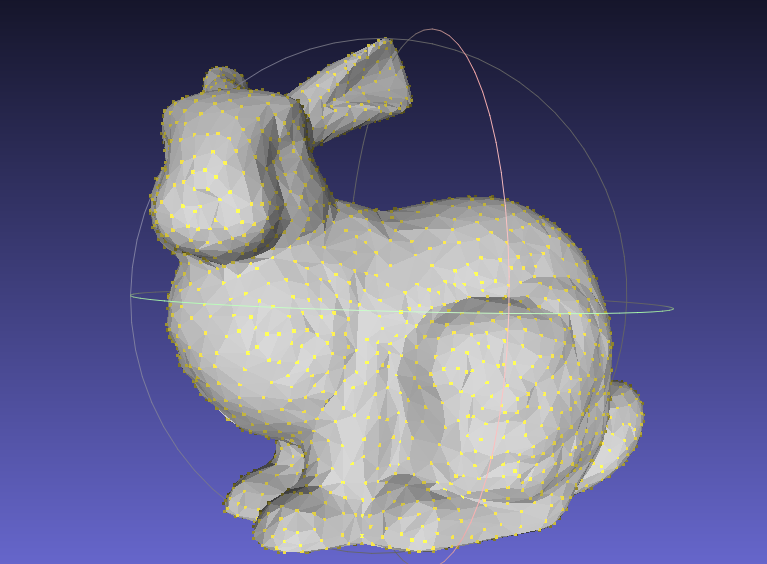

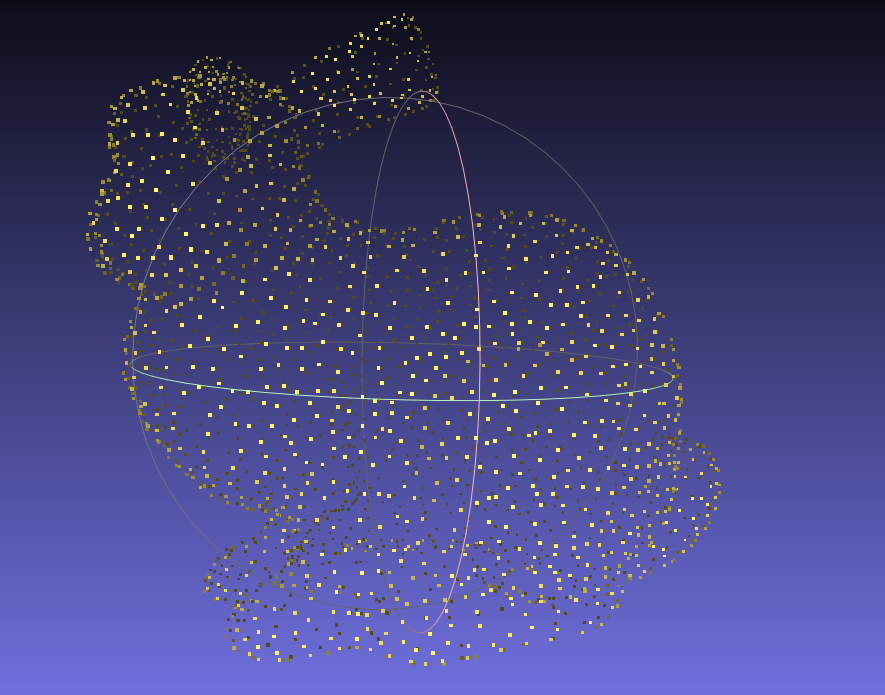

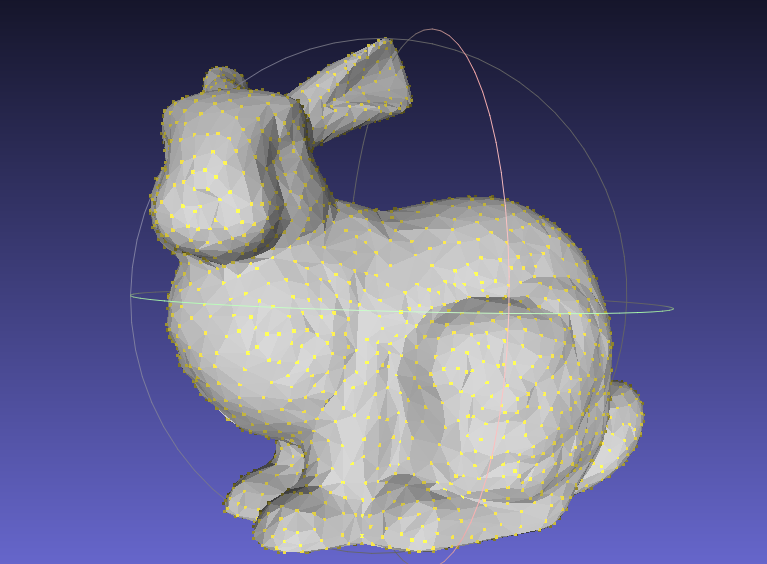

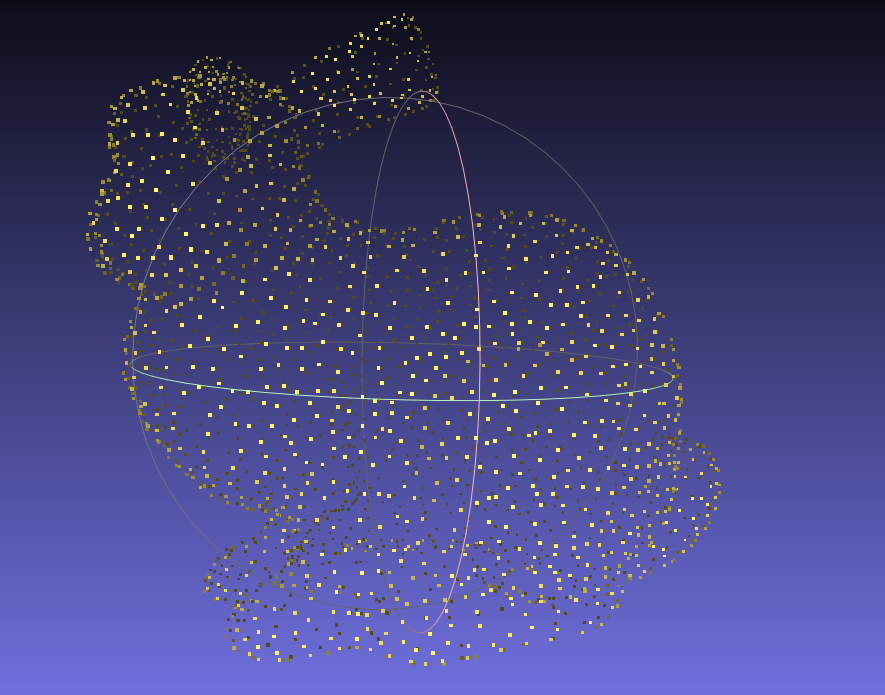

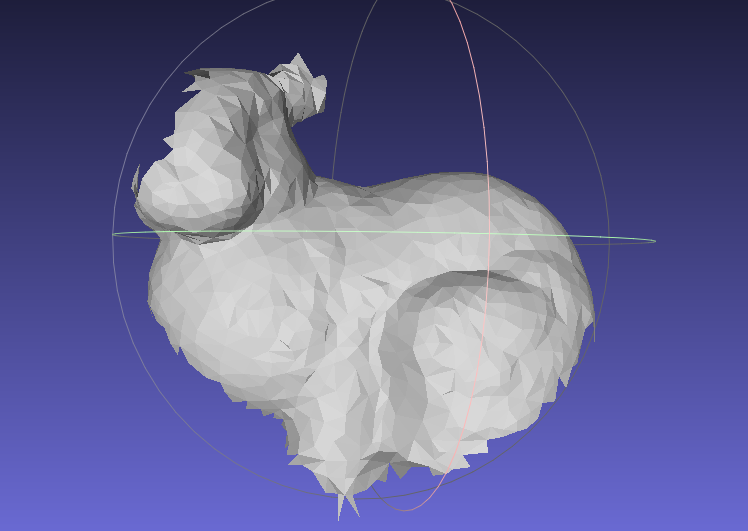

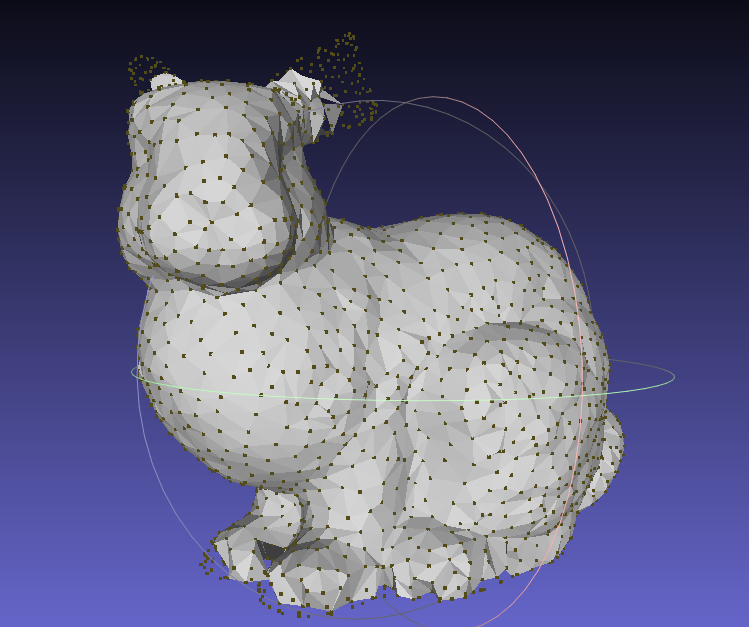

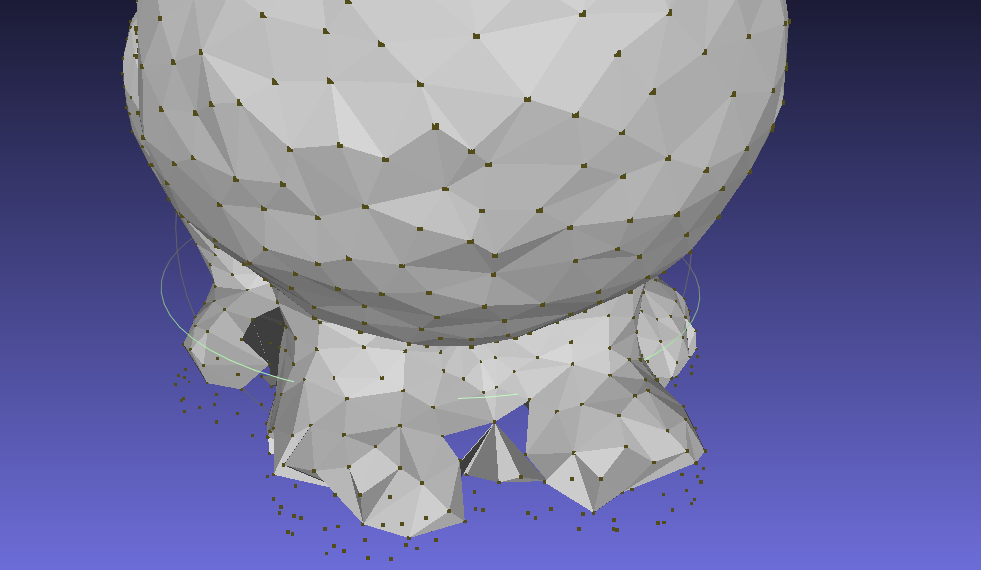

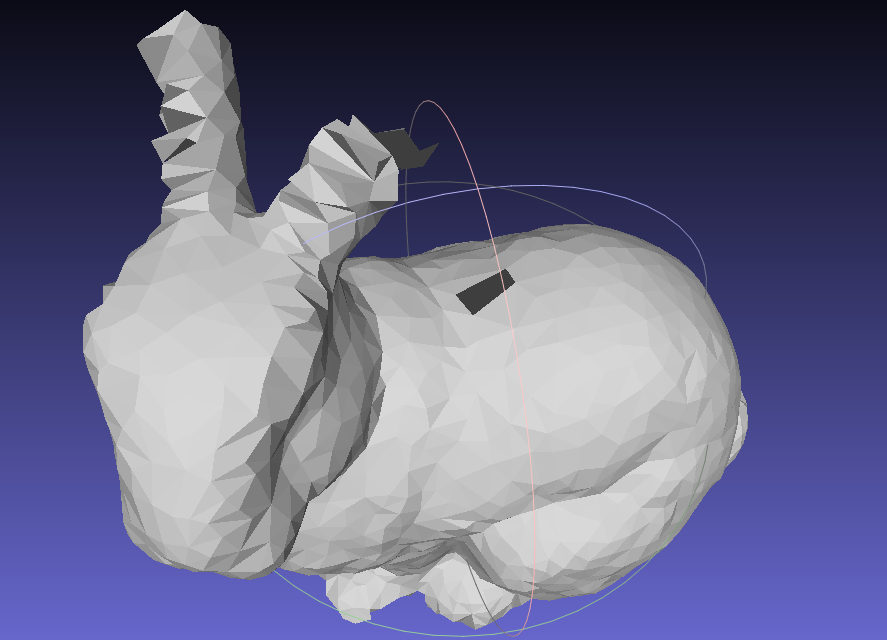

We first use the original mesh to generate a point cloud with vertex normals, which serves as the input to our algorithm, as shown in the following figures.


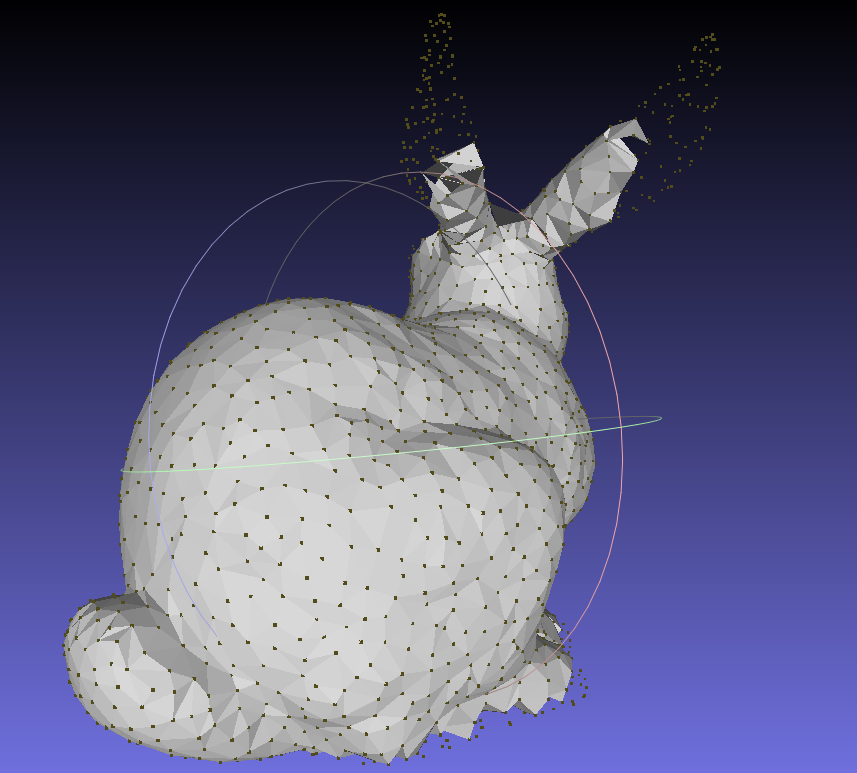

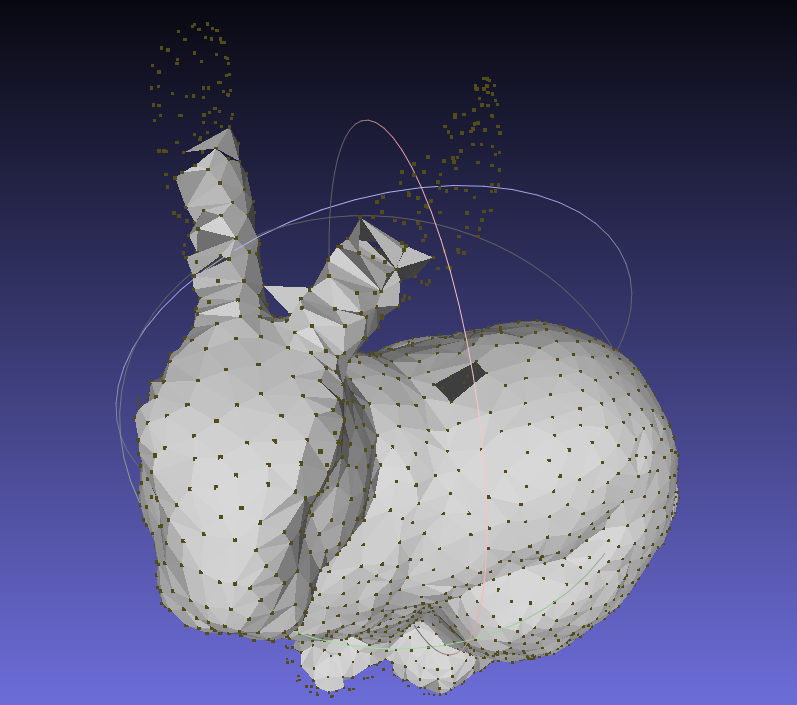

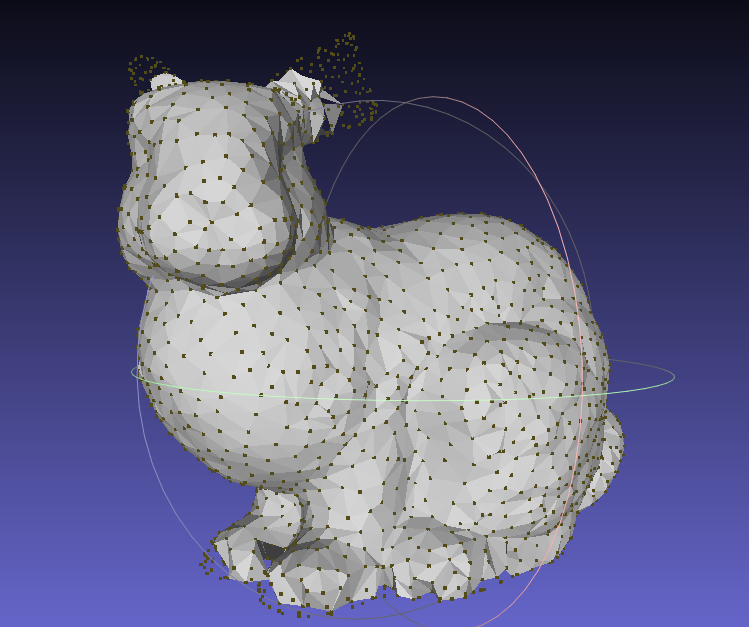
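The point cloud generation step above can be sketched in a few lines. This is a minimal illustration, not our actual pipeline: it assumes the mesh is given as a list of vertex coordinates and a list of counter-clockwise triangles, and it assumes area-weighted averaging of face normals, which is one common choice among several. The function names `vertex_normals` and `mesh_to_point_cloud` are hypothetical.

```python
import math

def vertex_normals(vertices, faces):
    """Area-weighted vertex normals (an assumed weighting scheme)."""
    normals = [[0.0, 0.0, 0.0] for _ in vertices]
    for i, j, k in faces:
        a, b, c = vertices[i], vertices[j], vertices[k]
        e1 = [b[d] - a[d] for d in range(3)]
        e2 = [c[d] - a[d] for d in range(3)]
        # Cross product of two edges: direction is the face normal,
        # length is twice the face area, so larger faces weigh more.
        n = [e1[1] * e2[2] - e1[2] * e2[1],
             e1[2] * e2[0] - e1[0] * e2[2],
             e1[0] * e2[1] - e1[1] * e2[0]]
        for idx in (i, j, k):
            for d in range(3):
                normals[idx][d] += n[d]
    unit = []
    for n in normals:
        length = math.sqrt(sum(x * x for x in n))
        # Vertices that belong to no face keep a zero normal.
        unit.append(tuple(x / length for x in n) if length > 0 else (0.0, 0.0, 0.0))
    return unit

def mesh_to_point_cloud(vertices, faces):
    """Drop the faces and keep (position, normal) pairs."""
    return list(zip([tuple(v) for v in vertices], vertex_normals(vertices, faces)))

# Usage: a single triangle lying in the xy-plane.
verts = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0)]
cloud = mesh_to_point_cloud(verts, [(0, 1, 2)])
```

Each entry of `cloud` pairs a position with a unit normal; the face list is discarded, and recovering it is exactly what the reconstruction step has to do.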

After that, we use the point cloud with vertex normals as input to reconstruct a mesh, which is the most challenging part of this project. The basic idea is to set a hyperparameter r as the radius of a sphere. Whenever the sphere is "captured" by 3 points, we use these points to form a triangular face. At each step we roll the sphere over the surface of the point cloud and possibly construct a new face (sometimes a face cannot be constructed because the required conditions are not satisfied). The following figure shows the results after different numbers of steps.

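To make the "capture" condition concrete, the sketch below computes where a sphere of radius r would sit when it touches three given points. It is a geometric illustration under stated assumptions, not our implementation: we assume a face is rejected when r is smaller than the triangle's circumradius or when another point lies inside the sphere, and we assume the sphere is placed on the side given by the vertex ordering. The names `ball_center` and `ball_is_empty` are hypothetical.

```python
import math

def sub(u, v): return (u[0] - v[0], u[1] - v[1], u[2] - v[2])
def add(u, v): return (u[0] + v[0], u[1] + v[1], u[2] + v[2])
def scale(u, s): return (u[0] * s, u[1] * s, u[2] * s)
def dot(u, v): return u[0] * v[0] + u[1] * v[1] + u[2] * v[2]
def cross(u, v):
    return (u[1] * v[2] - u[2] * v[1],
            u[2] * v[0] - u[0] * v[2],
            u[0] * v[1] - u[1] * v[0])

def ball_center(p0, p1, p2, r):
    """Center of a radius-r sphere touching p0, p1, p2, or None."""
    a, b = sub(p1, p0), sub(p2, p0)
    n = cross(a, b)
    n2 = dot(n, n)
    if n2 == 0.0:
        return None  # collinear points do not define a triangle
    # Circumcenter of the triangle, expressed relative to p0.
    t = cross(sub(scale(b, dot(a, a)), scale(a, dot(b, b))), n)
    offset = scale(t, 1.0 / (2.0 * n2))
    h2 = r * r - dot(offset, offset)  # squared height above the plane
    if h2 < 0.0:
        return None  # sphere too small to touch all three points
    # Lift the circumcenter along the unit normal (side set by ordering).
    return add(add(p0, offset), scale(n, math.sqrt(h2) / math.sqrt(n2)))

def ball_is_empty(center, r, points, skip, eps=1e-9):
    """True if no point other than those in `skip` lies inside the sphere."""
    return all(math.dist(center, q) >= r - eps
               for i, q in enumerate(points) if i not in skip)

# Usage: a right triangle in the xy-plane with r = 1.
pts = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0)]
center = ball_center(pts[0], pts[1], pts[2], 1.0)
```

A candidate face would be accepted only when `ball_center` returns a center and `ball_is_empty` holds for it. The first check also suggests why the choice of r matters: triangles whose circumradius exceeds r can never be captured.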

Clearly, the more faces are generated, the more accurate the mesh becomes. However, even after 4400 steps, where our algorithm converges, we still do not obtain a perfect mesh. Compared with the original mesh, the main body, which is smoother and more continuous, is easier to reconstruct, while small details such as the ears and the feet are more difficult to reconstruct.


Our analysis reveals the following problems:


Here are the links to our video and slides: